SoftNeuro Ver.5.2.0 API Document Rev.1.0.0

1. About

This document is the SoftNeuro API reference. It also provides sample code and a list of supported layer names.

1.1. Target readers

This document is intended for software engineers with a basic knowledge of C and deep learning.

1.2. Related documents

Refer to the "CLI Tool Reference Guide" for details on the command line tool, and to the "Python API document" for details on the Python API.

1.3. Quick links

- morapi_Dnn

- morapi_DnnProf

- morapi_DnnOptimiser

- morapi_DnnRecipe

- Dnn profiling example

- Dnn decomposing example

2. Technical overview

SoftNeuro is a high-performance deep learning inference engine with multiple backend support.

The SoftNeuro SDK consists of a Python module, a unified command line tool, and this runtime library.

|

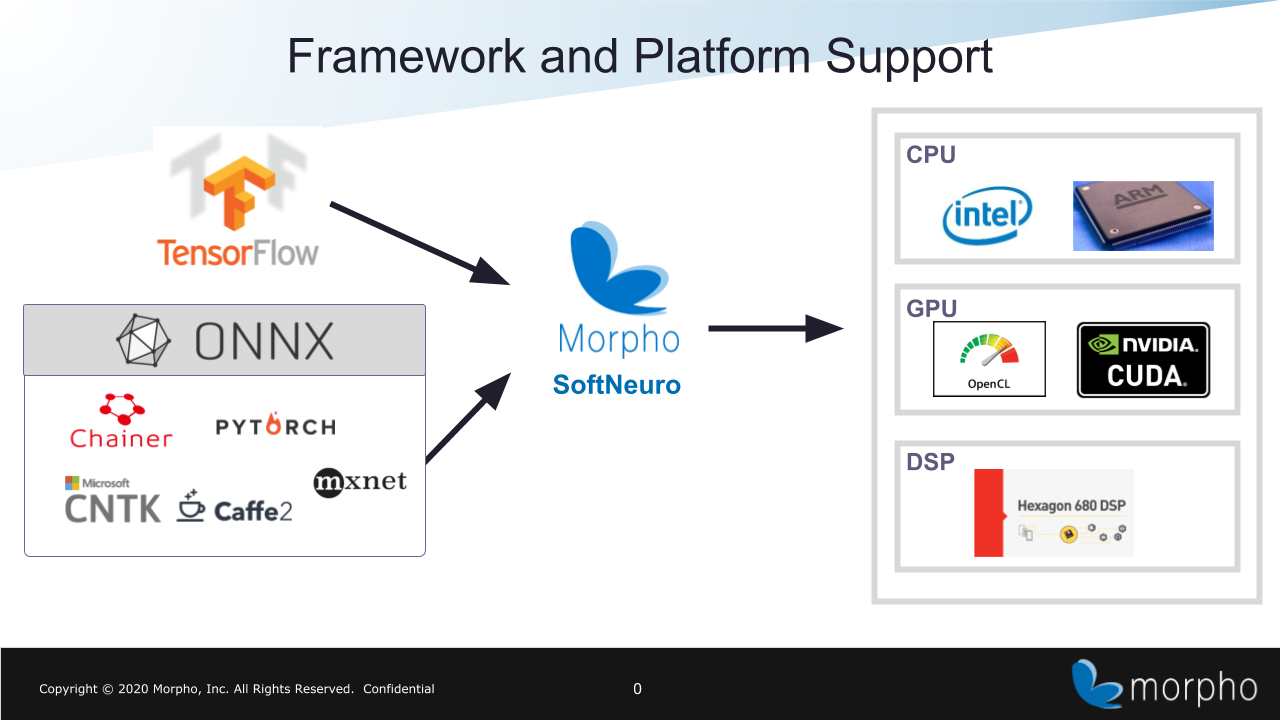

| Fig.1 SoftNeuro Overview |

The softneuro unified command line tool imports trained models from popular deep learning frameworks and converts them into SoftNeuro's dnn format.

The currently supported frameworks are TensorFlow and ONNX. Through ONNX, models from Caffe2, Chainer, Microsoft Cognitive Toolkit, MXNet, PyTorch and PaddlePaddle can also be imported.

During conversion, the computational graph is optimized using layer fusion, which merges adjacent layers into a single layer.

The command line tool also provides other functions, such as tuning a dnn model for the best performance on the target device, collecting runtime statistics, and encrypting files to reduce the risk of model leakage.

Please refer to the "CLI Tool Reference Guide" for further information.

By supporting multiple backends, such as Intel CPUs, ARM CPUs, Qualcomm DSPs (HNN), NVIDIA GPUs (CUDA) and other GPUs (OpenCL), SoftNeuro enables high-performance inference on a wide range of platforms, operating systems and hardware devices.

There are plans to support even more backends in the future.

|

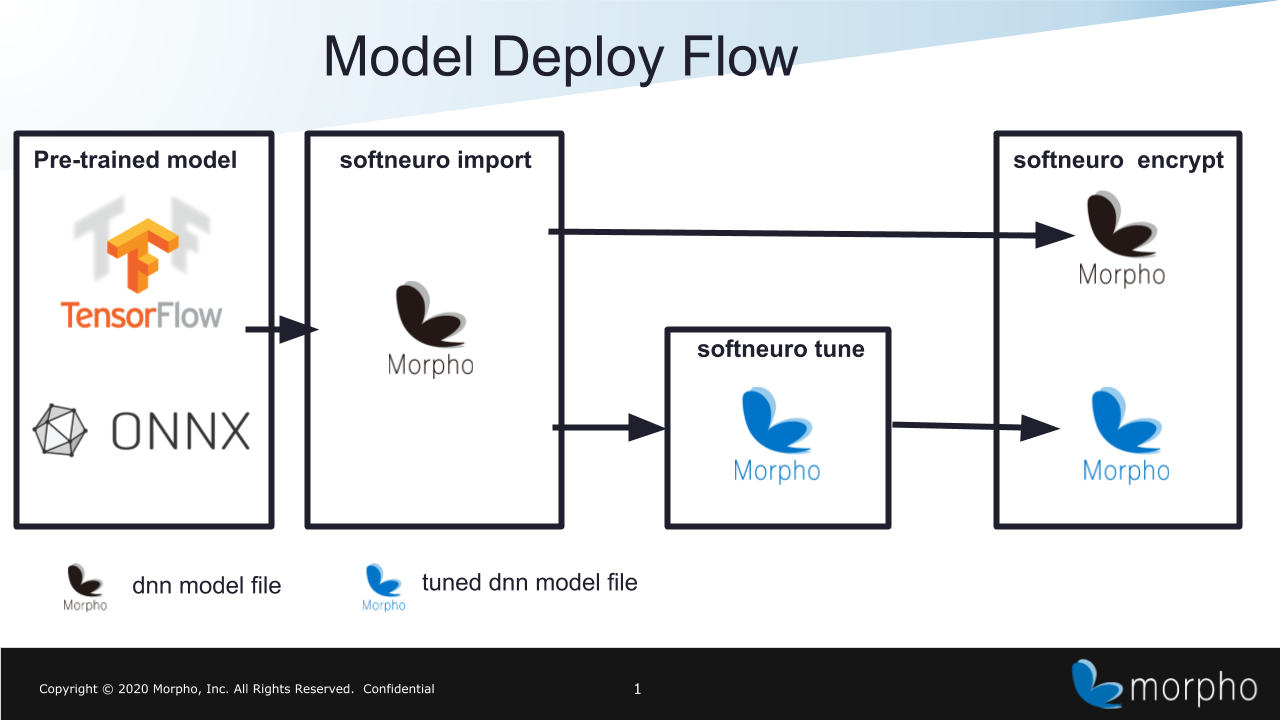

| Fig.2 Model Deploy Flow |

3. Specifications

3.1. System structure

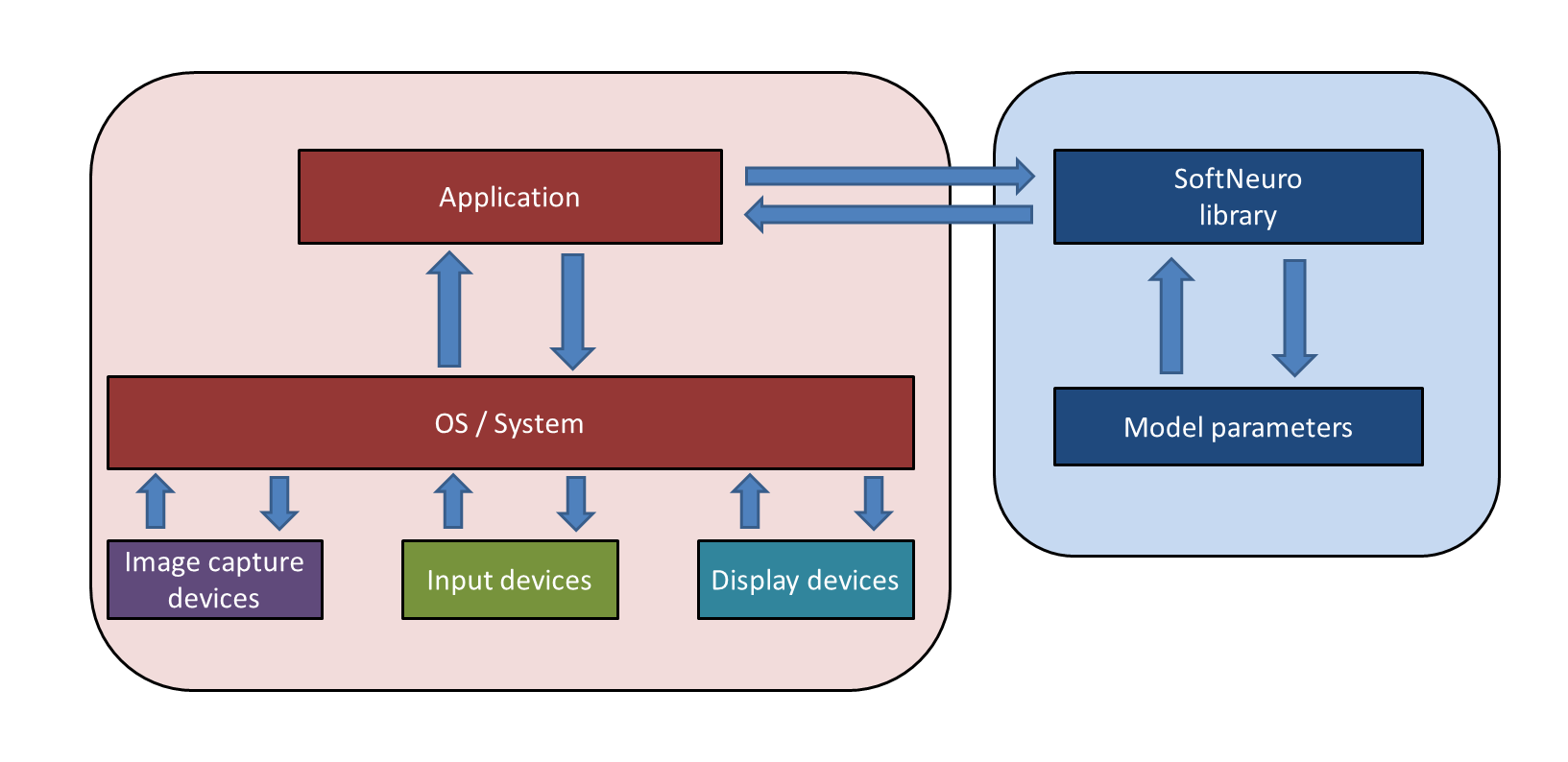

The following figure illustrates a structural example of a deep learning inference system that employs SoftNeuro.

|

| Fig.3 Structural Example |

3.2. Library process flow

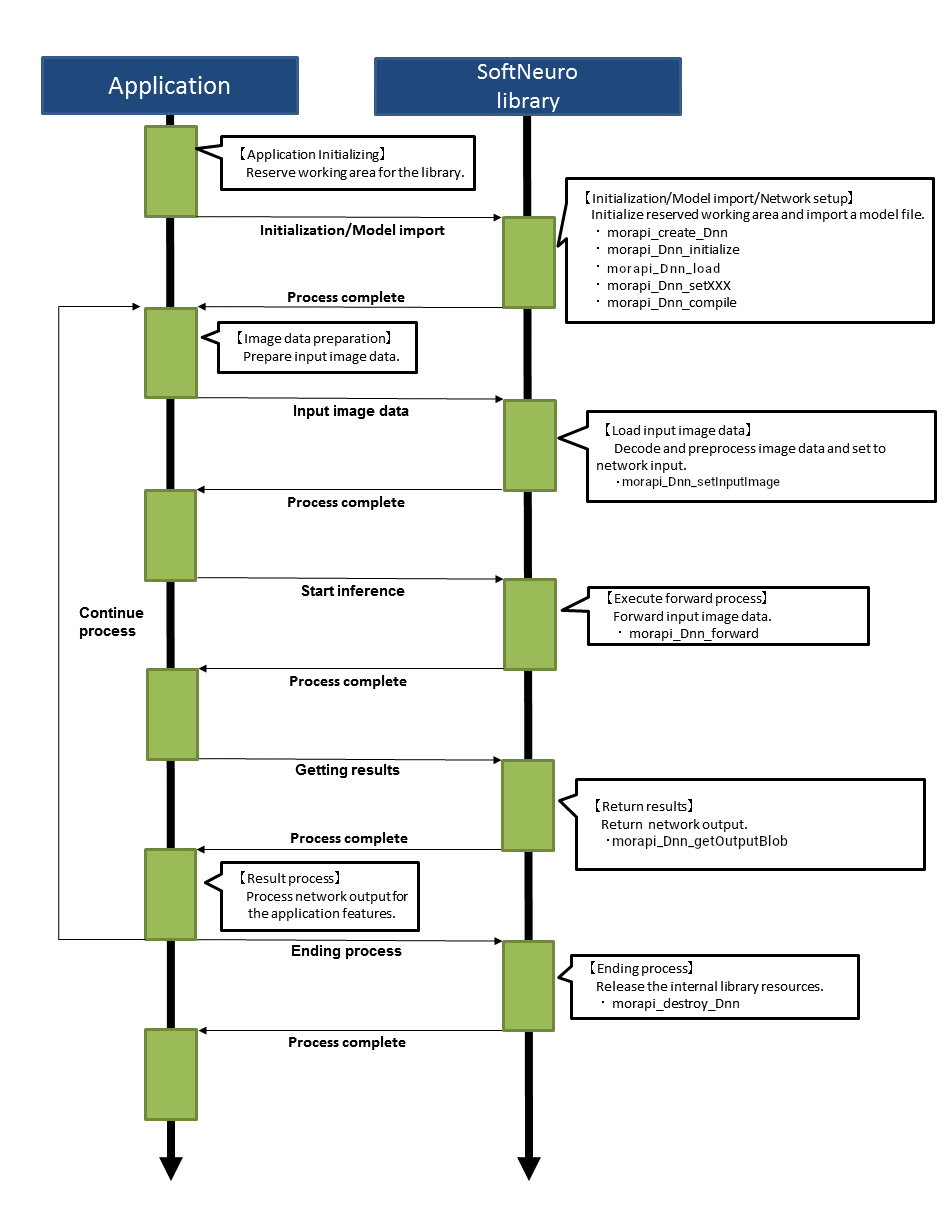

The following figure illustrates the process flow of communications between SoftNeuro and an application program.

More information on library processes can be viewed in this sample program.

Function return values are explained in morapi_Dnn reference.

|

| Fig.4 Library Process Flow |

4. Memory usage

Memory usage varies depending on the model parameters.

For further information, contact our support team.

5. Model parameters

Trained model parameters are stored in a binary file, which can then be used for the task the model was trained on, such as object detection or classification.

This feature is implemented using deep learning, one of many machine learning methods.

For further information, contact our support team.